Advanced Micro Devices, Inc. (NASDAQ:AMD) stock has forged a strong recovery this year, albeit still trailing the valuation of industry leader Nvidia Corporation (NVDA) as the latter continues to lead in the artificial intelligence, or AI, race. But AMD’s Q3 outperformance underscores momentum in the company’s upcoming step-up in its AI efforts with the launch of the MI300X accelerators.

Specifically, the data center segment has benefitted from the strong ramp of 4th Gen EPYC server processors during the third quarter as optimization headwinds in the industry start to normalize. This is largely in line with observations at primary cloud service providers (“CSPs”) – some of AMD’s largest customers – during the latest earnings season. And the segment’s outlook is further bolstered by the strong engagements with CSPs and key AI technology providers on the MI300 deployments so far, underscoring favorable market share gains for AMD as the product starts to ship later this year.

Coupled with a stabilizing PC demand environment, which has been reinforced by AMD’s latest slate of client CPU/GPU offerings and the rise of AI application developments, the chipmaker remains well-positioned to further narrow its valuation gap with the industry leader.

AI Monetization is Looking Stronger Than Ever

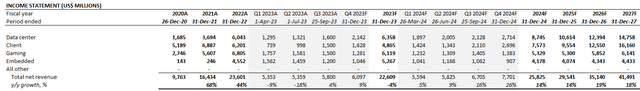

AMD reported Q3 data center revenue of $1.6 billion, which was flat y/y and up 21% sequentially in line with management’s earlier guidance. The results also track favorably towards market optimism for at least 50% growth in 2H23 from 1H23.

EPYC Series Server Processors

Much of the segment’s impressive Q3 results were driven by the continued ramp of the 4th Gen EPYC server processors. Recall that Genoa is one of the strongest general purpose server processors currently available in the market. The chip delivers almost double the performance and power efficiency of its closest competition in “enterprise and cloud applications”, making Genoa one of the industry’s “most efficient server processors.” And with cloud optimization headwinds normalizing, adoption rates of the next-generation EPYC processors are expected to benefit from incremental recovery tailwinds going forward. Specifically, 4th Gen EPYC server processor sales grew by more than 50% sequentially in Q3, bolstered by continued expansion of deployments across hyperscalers. New Genoa deployments can now be found across more than 770 public cloud instances, up by 100 in Q3 from Q2, which tracks favorably towards management’s target of 900 by the end of 2023.

In addition, the Bergamo and Genoa-X variants have also benefitted from AI tailwinds given their tailored performance for facilitating complex workloads. Specifically, the Genoa-X is capable of more than 5x the performance of its predecessor in “technical computing workloads”, while Bergamo delivers almost double the performance of its closest competitor for “cloud-native applications”. This has accordingly bolstered the products’ appeal to tier 1 hyperscalers and key AI infrastructure providers, spanning Microsoft Azure (MSFT) and Meta Platforms (META), with a strong ramp expected through the year.

And while the enterprise demand environment remains mixed, recent EPYC adoption trends are showing signs of gradual recovery. Key enterprise server providers spanning Dell Technologies (DELL), Hewlett Packard Enterprise Company (HPE), Lenovo Group Limited (OTCPK:LNVGY, OTCPK:LNVGF) and Super Micro Computer (SMCI) launched Bergamo platforms in Q3. And although telco end markets remain soft, the leading enterprise server providers also launched new platforms during Q3 based on AMD’s Siena 4th Gen EPYC processor, which is optimized for communications infrastructure, retail and manufacturing use cases. This is in line with server providers’ acknowledgement of incremental upsides in demand fueled by emerging AI interest during the latest earnings season. Specifically, Dell – a key AMD customer – reported a $2+ billion order backlog on its servers optimized for AI use cases. HPE has also reported similar trends, indicating a strong demand environment that is favorable to AMD’s enterprise sales in the near term and complements its new data center technology ramp over the coming quarters.

Instinct MI Series Accelerators

The star of the show remains the next-generation Instinct MI300 accelerators. As discussed in our previous coverage on the stock, the Instinct accelerators remain a key area of AI monetization for AMD, as corroborated by strong AI cluster engagements with customers in recent quarters as they prepare for upcoming MI300 deployments at scale. Specifically, the MI300X, which is optimized for AI inferencing, is on track for initial customer shipments in the coming weeks. Meanwhile, production shipments of the MI300A APUs for the El Capitan Exascale Supercomputer started earlier this month, progressing according to schedule.

The MI300X will be competitive with industry leader Nvidia’s H100 accelerators in terms of performance, memory and power efficiency. Based on AMD’s latest CDNA 3 architecture, which is capable of more than 5x the performance-per-watt of its predecessor for AI inferencing, the MI300X is expected to be “the world’s most advanced accelerator for generative AI.” Specifically, the MI300X offers competitive memory efficiency for training large language models and inferencing AI workloads with up to 192 GB of HBM3 memory support, addressing customers’ demand for scalability and competitive TCO. This makes AMD’s upcoming Instinct accelerators a close substitute for Nvidia’s H100 chips, which have been hard to come by in the supply-constrained environment spurred by the AI frenzy.

Looking ahead, the MI300 Series accelerators are likely to benefit from more evident share gains and monetization through AI use cases going into 2024. AMD has guided $400 million in data center GPU revenue contributions in Q4, with the figure expected to exceed $2 billion through 2024, making it the fastest product to surpass $1 billion in sales in the company’s history.

Although AMD has marketed the MI300 series accelerators as the world’s most advanced, the lineup nonetheless has its fair share of close competitors. While the MI300X offers greater performance and efficiency than Nvidia’s H100 and Intel’s (INTC) Gaudi 2 currently available in the market, its technological competency already falls slightly behind Nvidia’s latest GH200 Superchip announced over the summer, which will start shipping in the latter half of 2024.

But considering Nvidia’s eye-watering growth from capitalizing on AI-driven demand with its H100 and A100 accelerator chips during the first half of the year, AMD’s upcoming MI300X is likely to be a strong share gainer heading into 2024 amidst the persistent industry tailwinds as well. And the chip’s upcoming launch is expected to be further complemented by robust deployment of 4th Gen EPYC systems in recent quarters, which the MI300X can leverage to drive competitive TCO and performance for end users.

Demand for the Instinct MI300 accelerators is also expected to be bolstered by AMD’s accommodating ROCm software platform, which has been further enhanced in recent quarters. In addition to supporting PyTorch, TensorFlow, ONNX, and OpenAI Triton to ensure a hardware-agnostic experience for customers, AMD also acquired Mipsology and Nod.ai in Q3 to further ROCm’s AI capabilities.

Emerging PC Recovery Tailwinds

In addition to AI monetization in the data center segment, AMD’s client segment sales have also started to pick up in Q3 as expected. The client segment reported Q3 revenue of $1.5 billion, returning to y/y growth of 47% with sequential growth of 50%, while also restoring operating profitability. The results are largely in line with market expectations that the PC downturn observed over the past year has troughed, though the near-term recovery outlook remains mixed. On the plus side, the market expects a seasonal tailwind coming up, alongside an anticipated upgrade cycle driven by increased PC performance demands to support AI applications, ranging from web-based solutions like OpenAI’s ChatGPT to app-based software like Microsoft 365 Copilot launching later this week. However, near-term softness in the consumer spending environment remains a lingering downside for demand, which is in line with continued, albeit slowed, declines in Q3 PC shipments.

AMD is likely to benefit from a stronger recovery heading into 2024, as the anticipated upgrade cycle for PCs, buoyed by aging purchases made during the pandemic era and AI demands, gains momentum alongside an easier prior year compare. The anticipated upgrade cycle is likely to be further supported by AMD’s latest slate of client computing hardware, spanning the Ryzen 7000 Series CPUs and Radeon RX 7000 Series GPUs, which are based on AMD’s newest Zen 4 and RDNA 3 architectures, respectively.

Specifically, AMD anticipates a slate of more than 100 new commercial PC platforms based on its latest technologies launching before the end of the year from notable PC makers such as HP Inc. (HPQ) and Lenovo. Both of the company’s latest client computing products are fitted with AI competencies, underscoring its comprehensive monetization roadmap for the nascent technology that spans across hyperscaler infrastructure, commercial and consumer applications.

The Ryzen Pro 7040 mobile CPUs, for example, are expected to maintain leadership in enterprise-grade performance and energy efficiency by being the first to incorporate AMD’s proprietary “AMD Ryzen AI” technology – the industry’s first integrated AI engine for Windows workstations built on x86 processors. The chipmaker has also recently announced the latest Ryzen Threadripper PRO 7000 WX-Series CPUs for commercial desktop workstations. The latest technology is expected to ship with the latest high-end desktops rolling out from leading OEMs including Dell, HP and Lenovo – among them “AI-ready workstations” – which will further reinforce AMD’s client demand environment ahead of an anticipated upgrade cycle.

Meanwhile, the latest Radeon RX 7900 is also expected to deliver as much as 7% better performance than its key competitor, Nvidia’s GeForce RTX 4080 GPU. The Radeon RX 7000 Series GPUs are also capable of more than double the AI performance of their predecessors, and are equipped with AMD’s next-generation “AMD Radiance Display” and “AMD Radeon Rays” ray-tracing technology based on the latest RDNA 3 architecture. This is likely to encourage an emerging upgrade cycle in gaming use cases as well, in addition to high-end desktop workstations, and potentially lift the segment out of its long-running streak of quarterly declines.

Fundamental and Valuation Considerations

Taken together, AMD is well-positioned to benefit from emerging strength in both its client and data center segments, helped by the combination of growing recovery tailwinds and AI momentum. But this is likely to be partially offset by an anticipated slowdown in the embedded segment. After six consecutive quarters of robust growth since Xilinx’s integration, the segment’s growth has started to normalize alongside incremental macroeconomic impacts that have stifled demand from the key communications infrastructure vertical. This trend is corroborated by Q3’s revenue decline, which management expects to last through at least the first half of 2024 as customers digest their inventory builds.

Lingering regulatory uncertainties over AMD’s footprint in China also remain an overhanging risk. Specifically, the U.S. government has recently expanded the controlled threshold underpinning the curbs on advanced semiconductor technology exports to China. While this is expected to weigh on AMD’s performance, considering it generates about a fifth of its revenue from the Chinese market, the anticipated damage to near-term sales – particularly pertaining to the EPYC processors and Instinct accelerators – is not expected to be material. As discussed in one of the earlier sections, the demand environment for AI accelerators remains supply constrained, while the core market for AMD’s latest EPYC processors remains in the U.S. This is expected to give AMD some time to find remedies and compensate for the anticipated longer-term impact on the demand environment for high performance chips in the Chinese market going forward. Meanwhile, the company also generates a large part of its China revenue from the sale of lower performance chips for client and commercial infrastructure applications, which mitigates some of the growth impact from the export restrictions.

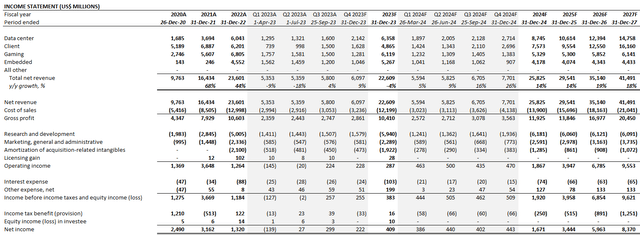

As a result, our updated fundamental forecast, adjusted for AMD’s actual Q3 performance and forward outlook as discussed in the foregoing analysis, estimates a revenue decline of 4% y/y to $22.6 billion for 2023. Specifically, the data center segment is expected to be the key growth driver over coming quarters, re-emerging with strength through 2024 after coming off of the slowdown observed earlier this year due to cloud spend optimization headwinds. Much of the segment’s anticipated growth is expected to be fueled by the ramp of 4th Gen EPYC processors alongside new system deployments with the Instinct MI300X and MI300A accelerators heading into 2024.
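As a quick sanity check on the headline forecast, the prior-year revenue base implied by a $22.6 billion figure and a 4% y/y decline can be backed out with simple arithmetic. The sketch below is illustrative only; the function name is hypothetical and the result is an implied, rounded figure rather than a reported one.

```python
def implied_prior_year_revenue(current_revenue: float, yoy_change: float) -> float:
    """Back out the prior-year base from a revenue level and its y/y change.

    yoy_change is a fraction, e.g. -0.04 for a 4% decline.
    """
    if yoy_change <= -1.0:
        raise ValueError("y/y change must be greater than -100%")
    return current_revenue / (1.0 + yoy_change)


# Inputs taken from the forecast above (in $ billions).
forecast_2023 = 22.6
yoy_change = -0.04

base_2022 = implied_prior_year_revenue(forecast_2023, yoy_change)
print(f"Implied 2022 revenue base: ${base_2022:.1f} billion")
```

Because both inputs are rounded, the implied base is approximate and may differ slightly from the reported prior-year figure.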


On the cost front, we expect limited margin expansion in the current quarter. Recall that the embedded segment is one of AMD’s most profitable segments, with an operating margin of more than 50%. As related sales start to temper, the ensuing impact on profitability will likely need to be compensated by the implementation of incremental internal cost efficiencies instead, thus limiting the near-term pace of margin expansion. However, the accelerated ramp of AMD’s AI processors expected in the new year is likely to drive incremental margin expansion with scale and provide a greater tailwind to the chipmaker’s profitability prospects going forward.


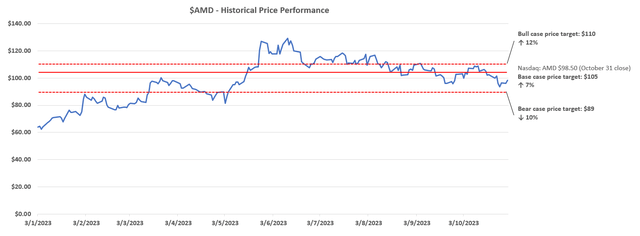

Admittedly, the broader semiconductor sector has commanded a robust valuation premium to the broader market this year, helped primarily by AI-driven total addressable market (“TAM”) expansion prospects in recent quarters, which have overshadowed the industry’s cyclical downturn. Yet AMD’s valuation continues to lag industry leader Nvidia’s by a wide margin, even on a relative basis considering both of their growth and earnings prospects.


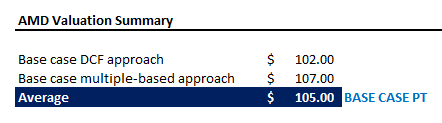

Our base case price target for AMD is $105. The price target equally weighs results from the discounted cash flow and multiple-based valuation approach.
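The equal weighting described above amounts to a simple average of the two per-share values. The sketch below illustrates the mechanics only: the two input values are hypothetical placeholders chosen so that the blend lands on $105, not the actual outputs of either valuation approach, and the function name is our own.

```python
def blended_price_target(dcf_value: float, multiple_value: float,
                         dcf_weight: float = 0.5) -> float:
    """Weight a DCF-derived value against a multiple-derived value."""
    if not 0.0 <= dcf_weight <= 1.0:
        raise ValueError("dcf_weight must be between 0 and 1")
    return dcf_weight * dcf_value + (1.0 - dcf_weight) * multiple_value


# HYPOTHETICAL per-share values, for illustration only.
hypothetical_dcf_value = 100.0
hypothetical_multiple_value = 110.0

target = blended_price_target(hypothetical_dcf_value, hypothetical_multiple_value)
print(f"Blended price target: ${target:.2f}")
```

Shifting `dcf_weight` away from 0.5 shows how sensitive the blended target is to the weighting choice when the two approaches disagree.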


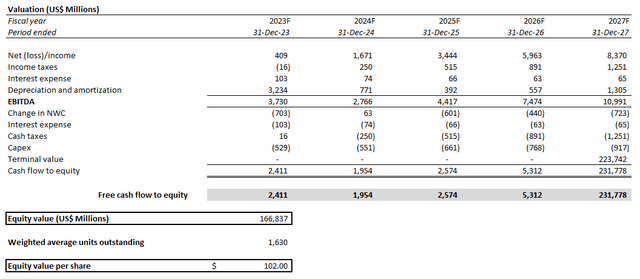

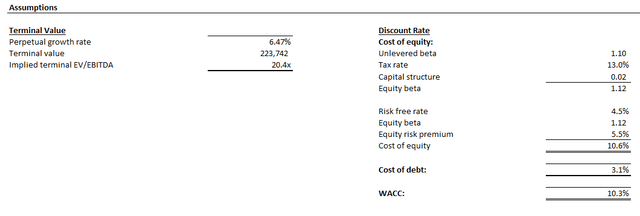

The discounted cash flow (“DCF”) approach considers projected cash flows in conjunction with the base case fundamental analysis discussed in the earlier section. A 10.3% WACC in line with AMD’s risk profile is applied, alongside a perpetual growth rate of 6.5% on projected 2027 EBITDA in line with AI-driven TAM expansion opportunities ahead. The perpetual growth rate applied is equivalent to 3.5% on projected 2032 EBITDA when AMD’s growth profile is expected to normalize in line with the pace of estimated economic expansion across its core operating regions.
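To show how the WACC and perpetual growth assumptions interact, the sketch below implements a generic Gordon growth terminal value and a plain discounted cash flow sum. It is a simplified illustration, not the model behind the figures above: it applies the growth rate to a generic final-year cash flow (the analysis above applies it to projected EBITDA), and the function names and the unit cash flow are hypothetical.

```python
from typing import Sequence


def terminal_value(final_cash_flow: float, wacc: float, growth: float) -> float:
    """Gordon growth terminal value at the end of the forecast horizon."""
    if growth >= wacc:
        raise ValueError("perpetual growth must be below the discount rate")
    return final_cash_flow * (1.0 + growth) / (wacc - growth)


def discounted_value(cash_flows: Sequence[float], wacc: float, growth: float) -> float:
    """Present value of year-end cash flows plus the discounted terminal value."""
    pv = sum(cf / (1.0 + wacc) ** t for t, cf in enumerate(cash_flows, start=1))
    tv = terminal_value(cash_flows[-1], wacc, growth)
    return pv + tv / (1.0 + wacc) ** len(cash_flows)


WACC = 0.103  # discount rate from the analysis above

# Terminal value per $1 of final-year cash flow under each growth assumption.
multiple_at_6_5 = terminal_value(1.0, WACC, 0.065)
multiple_at_3_5 = terminal_value(1.0, WACC, 0.035)
print(f"Implied terminal multiple at 6.5% growth: {multiple_at_6_5:.1f}x")
print(f"Implied terminal multiple at 3.5% growth: {multiple_at_3_5:.1f}x")
```

The gap between the two implied multiples shows why the choice of terminal year matters: the narrower the spread between WACC and perpetual growth, the more of the valuation rests on the terminal value.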


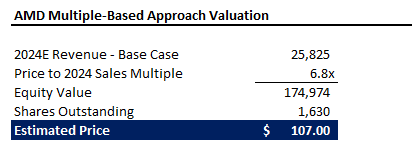

Meanwhile, the multiple-based approach considers 6.8x 2024 sales, which pulls the stock’s current valuation multiple closer to a premium over the industry average (4.7x) that is in line with AMD’s relative growth prospects. We believe AMD is at least deserving of this premium, given its positioning as one of the leading market share gainers in emerging AI opportunities, especially with its upcoming shipments of the MI300 accelerators.
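The premium embedded in the multiple-based approach can be made explicit with a short calculation. The sketch below uses the 6.8x and 4.7x multiples cited above; the 2024 sales input is a hypothetical placeholder (the forecast figure is not restated here), and the helper names are our own.

```python
def premium_over_peers(target_multiple: float, peer_multiple: float) -> float:
    """Fractional premium of a target multiple over a peer-average multiple."""
    if peer_multiple <= 0:
        raise ValueError("peer multiple must be positive")
    return target_multiple / peer_multiple - 1.0


def implied_value(forward_sales: float, sales_multiple: float) -> float:
    """Value implied by applying a sales multiple to forward sales."""
    return forward_sales * sales_multiple


TARGET_MULTIPLE = 6.8  # applied to 2024 sales, per the analysis above
PEER_MULTIPLE = 4.7    # industry average, per the analysis above

premium = premium_over_peers(TARGET_MULTIPLE, PEER_MULTIPLE)
print(f"Premium over industry average: {premium:.1%}")

# HYPOTHETICAL 2024 sales in $ billions, for illustration only.
hypothetical_2024_sales = 25.0
value = implied_value(hypothetical_2024_sales, TARGET_MULTIPLE)
print(f"Implied value at {TARGET_MULTIPLE}x sales: ${value:.0f} billion")
```

Converting the implied value to a per-share figure would additionally require the share count and, if the multiple is on an enterprise value basis, an adjustment for net cash or debt, neither of which is assumed here.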


The Bottom Line

AMD’s Q3 outperformance, particularly in the data center segment, reinforces its AI monetization roadmap ahead. This marks an inflection from its slow start earlier this year in capitalizing on the related opportunities compared to key rival Nvidia, which led to their widening valuation divergence in recent months. Taken together with gradual recovery tailwinds in the client segment, alongside anticipated margin expansion driven by scale to bolster AMD’s balance sheet heading into the new year, the stock is well-positioned to further narrow its valuation gap with the industry leader.

Editor’s Note: This article discusses one or more securities that do not trade on a major U.S. exchange. Please be aware of the risks associated with these stocks.
